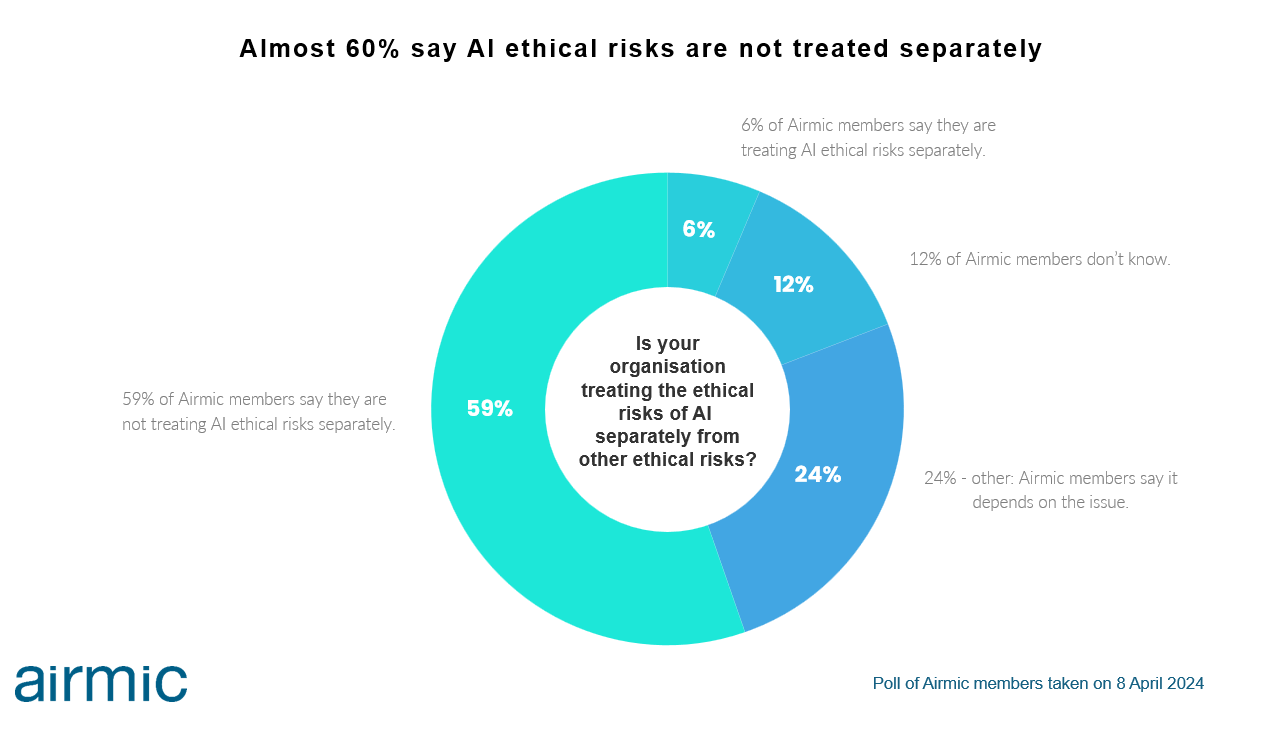

ALMOST 60% SAY AI ETHICAL RISKS NOT TREATED SEPARATELY

Findings open debate on managing AI alongside other ethical risks

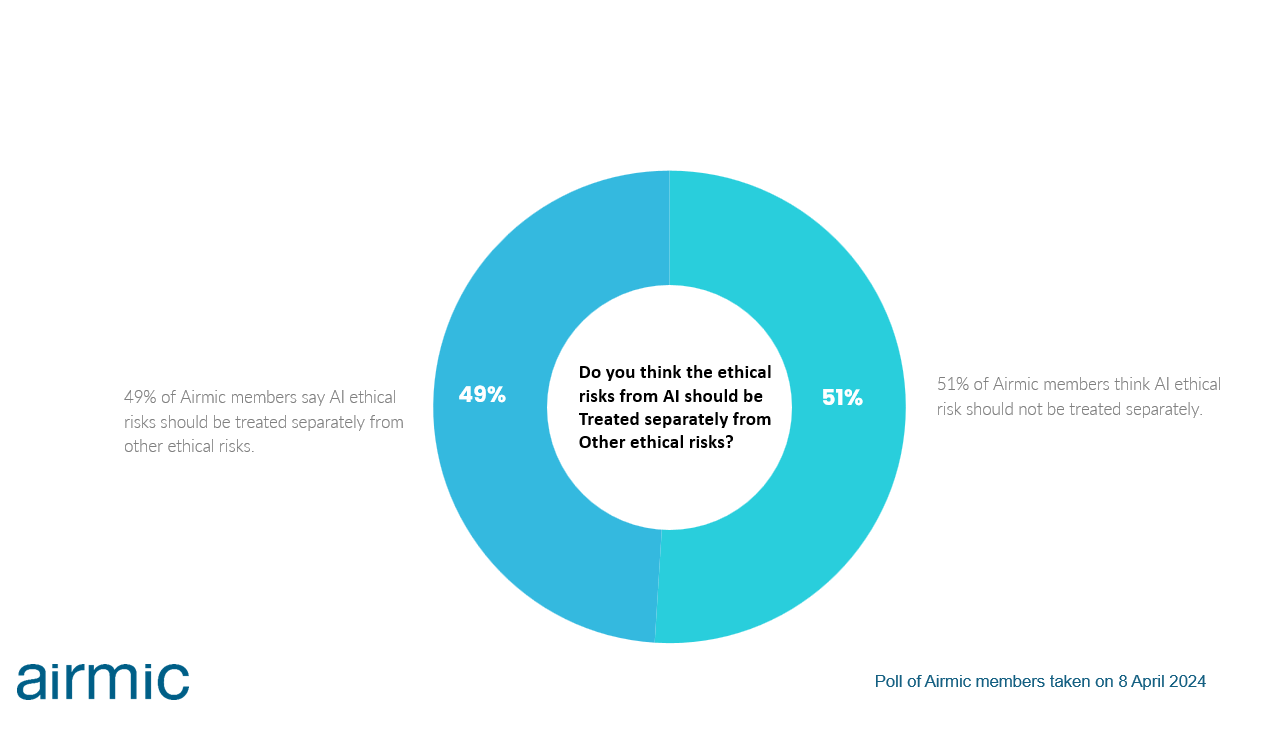

Organisations are treating the ethical risks from the use of artificial intelligence (AI) together with other ethical risks, said 59% of respondents in an Airmic survey this week. Respondents were almost evenly split as to whether they thought AI ethical risks ought to be treated separately.

As AI applications are rapidly adopted by organisations and individuals, respondents thought it sensible to give AI ethical risks extra visibility and attention within risk management frameworks and processes. This comes as a growing number of bodies call for organisations to establish AI ethics committees and separate AI risk assessment frameworks to steer them through ethically contentious situations.

Julia Graham, CEO of Airmic, said: “The ethical risks of AI are not yet well understood and additional attention could be spent understanding them, although in time, our members expect these risks to be considered alongside other ethical risks.”

Hoe-Yeong Loke, Head of Research, Airmic, said: “There is a sense among our members that ‘either you are ethical or you are not’ – that it may not always be practical or desirable to separate AI ethical risks from all other ethical risks faced by the organisation.”

“What this calls for is more debate on how AI ethical risks are managed. Regardless, organisations should carefully consider the implications of potentially overlapping risk management and governance structures.”

If you would like to request an interview or have any further questions, please let me know.

We will be sharing the results of the Airmic Big Question with the press weekly.

You can also find the results here.

Media contact: Leigh-Anne Slade

Leigh-Anne.Slade@Airmic.com

07956 41 78 77