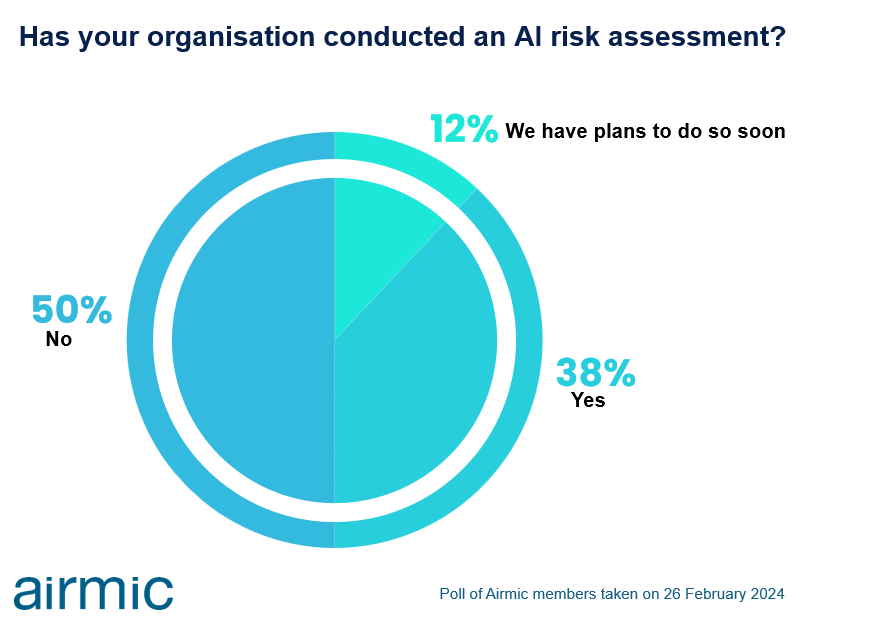

50% OF ORGANISATIONS HAVE NOT CONDUCTED AI RISK ASSESSMENTS

Current risk assessment tools and techniques ill-suited for AI risks

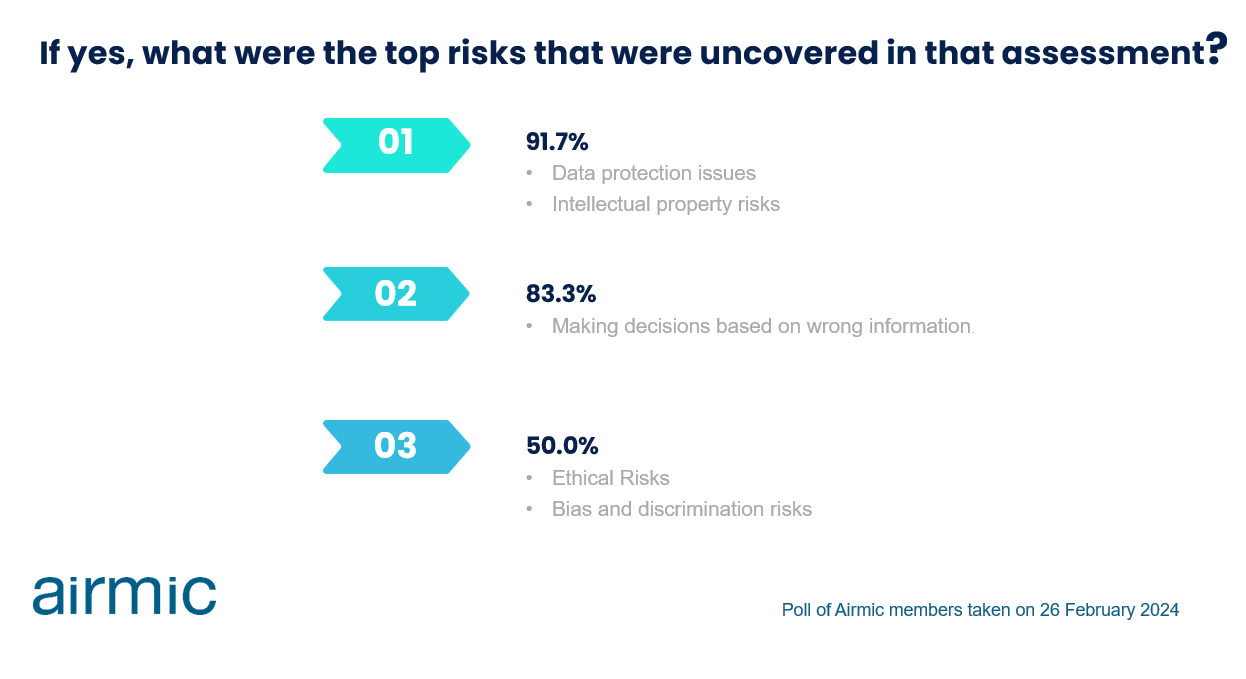

As many as 50% of organisations have not conducted artificial intelligence (AI) risk assessments, a survey of Airmic members this week has revealed. Data protection and intellectual property issues emerged as the top risks.

Julia Graham, CEO of Airmic, said: “Research indicates that most organisations, when they do conduct an AI risk assessment, are using traditional risk assessment frameworks better suited to the pre-AI world of assessment – this is an area of risk management still in its infancy for many.”

In research conducted last year by Riskonnect, only 9% of companies globally said they were prepared to deal with the risks associated with artificial intelligence.

Hoe-Yeong Loke, Head of Research at Airmic, said: “Many governments are just beginning to develop policies and laws specific to AI, while those that have are competing to put their stamp on how this emerging technology will develop. Understandably, there is no universally accepted model for assessing AI risk, but risk professionals can look to recently published standards such as ISO/IEC 23894:2023 Artificial intelligence: Guidance on risk management.”

Airmic will be looking to produce an updated methodology for AI risk assessments, in consultation with Airmic members and the industry.

If you would like to request an interview or have any further questions, please let me know.

We will be sharing the results of the Airmic Big Question with the press weekly.

Media contact: Leigh-Anne Slade

Leigh-Anne.Slade@Airmic.com

07956 41 78 77